Tele Empathy

As the title implies, the main subject of this work is empathetic telepathy. The core concept is a performance that leverages Inter-Brain Synchronisation (IBS) between two minds.

In theory, high levels of IBS occur during social interaction, especially when people co-create music or art. For this project, we developed technical solutions for decoding multiple streams of complex EEG data and mapping them, in real time, to visual and musical outputs that represent IBS.

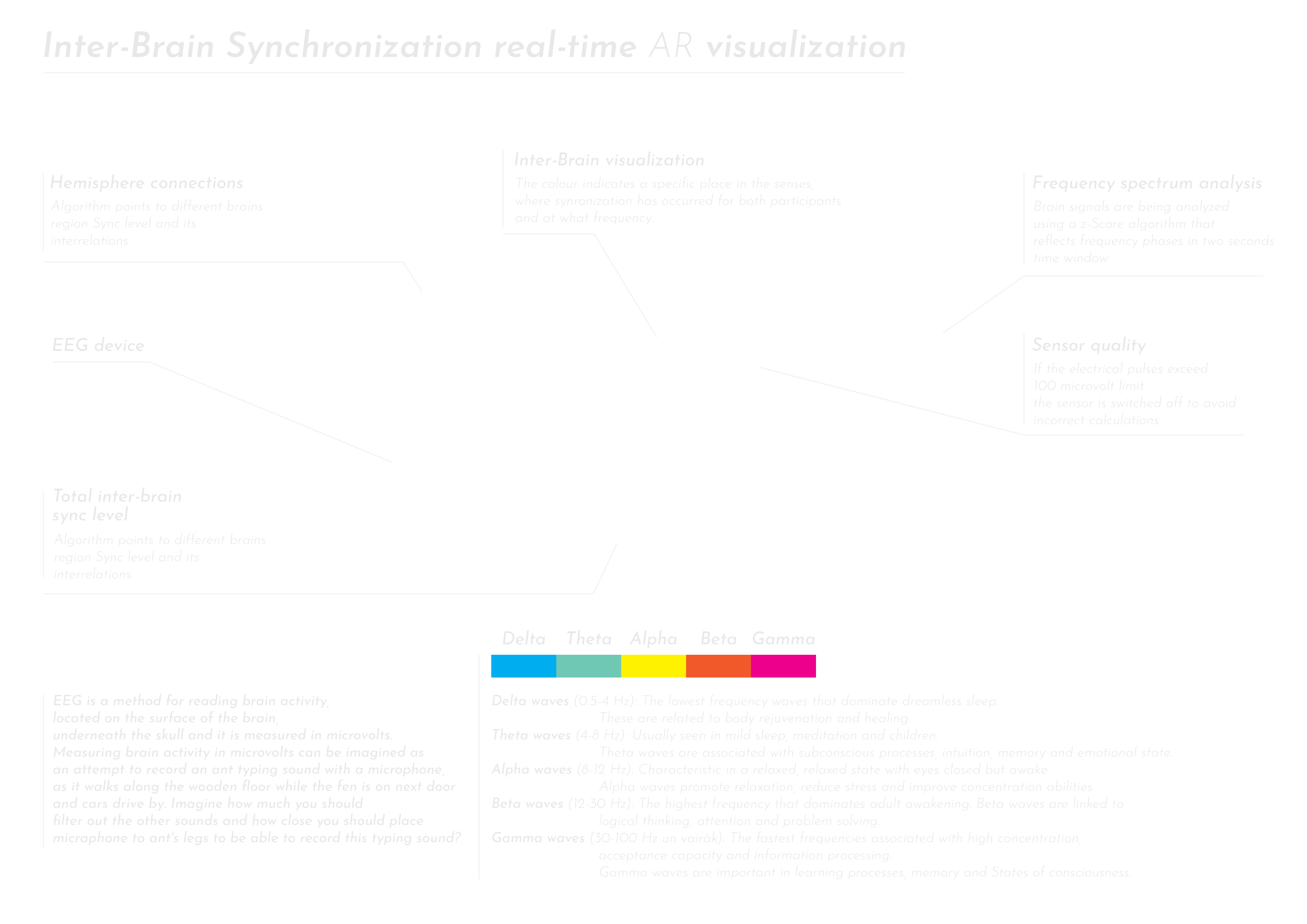

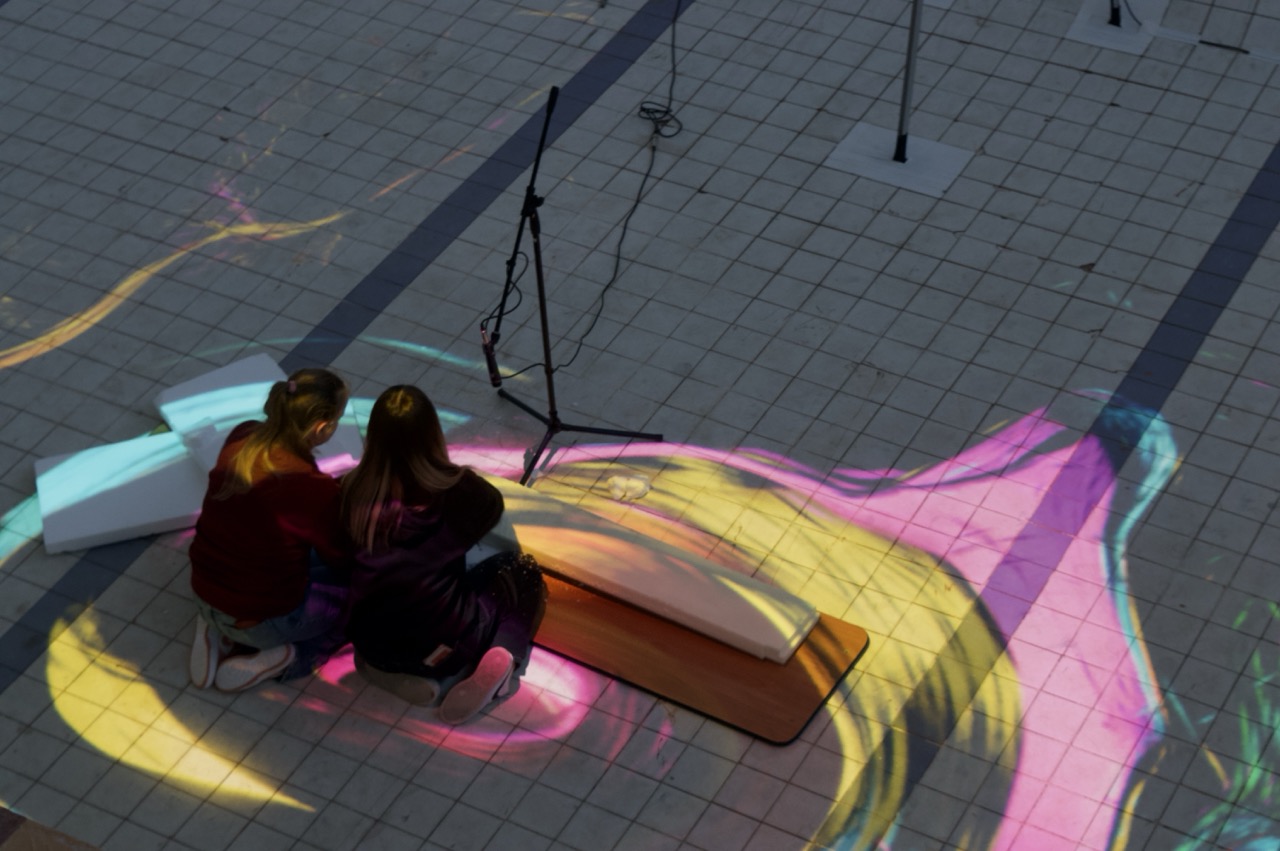

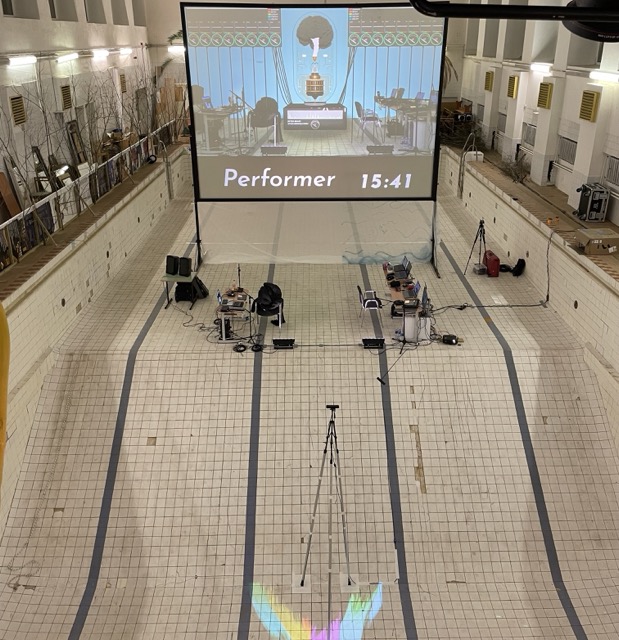

In our approach, two performers wear electroencephalography (EEG) electrode caps. Their brain activity and levels of IBS are visualised for the audience as coloured lighting inside a 3D brain, projected in augmented reality behind the performers. The EEG signal is also musified by mapping ongoing IBS metrics directly to musical parameters and to instruments played by the performers.
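As an illustration of that kind of mapping (not the project's actual patch), the sketch below assumes an IBS metric already normalised to the range 0 to 1 and scales it to a 7-bit MIDI Control Change value. The controller number 74, the MIDI channel and the function names are hypothetical choices.

```python
def ibs_to_cc(value, lo=0.0, hi=1.0):
    """Rescale an IBS metric from [lo, hi] to a 7-bit MIDI CC value (0-127), clipping outliers."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    clipped = min(max(value, lo), hi)
    return round((clipped - lo) / (hi - lo) * 127)


def control_change(channel, controller, value):
    """Build the raw 3-byte MIDI Control Change message (status byte 0xB0 | channel)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("MIDI field out of range")
    return bytes([0xB0 | channel, controller, value])


# Hypothetical mapping: an alpha-band IBS value drives CC 74 on MIDI channel 1 (index 0).
message = control_change(0, 74, ibs_to_cc(0.62))
print(message.hex())
```

Clipping keeps a noisy metric from producing out-of-range MIDI bytes; the resulting bytes would then be sent to a MIDI port with whatever MIDI library the audio machine uses.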

The main focus is observing inter-brain dynamics during live performance. Because IBS also modulates in response to external stimuli in the environment, it is possible to observe shared responses to the audience or to other performances and artworks in the space.

This performance reveals previously hidden dynamics of shared human thought and emotion. In other words, it creates a neurofeedback loop in the form of interactive visual and musical art, driven by the interaction between two connected minds.

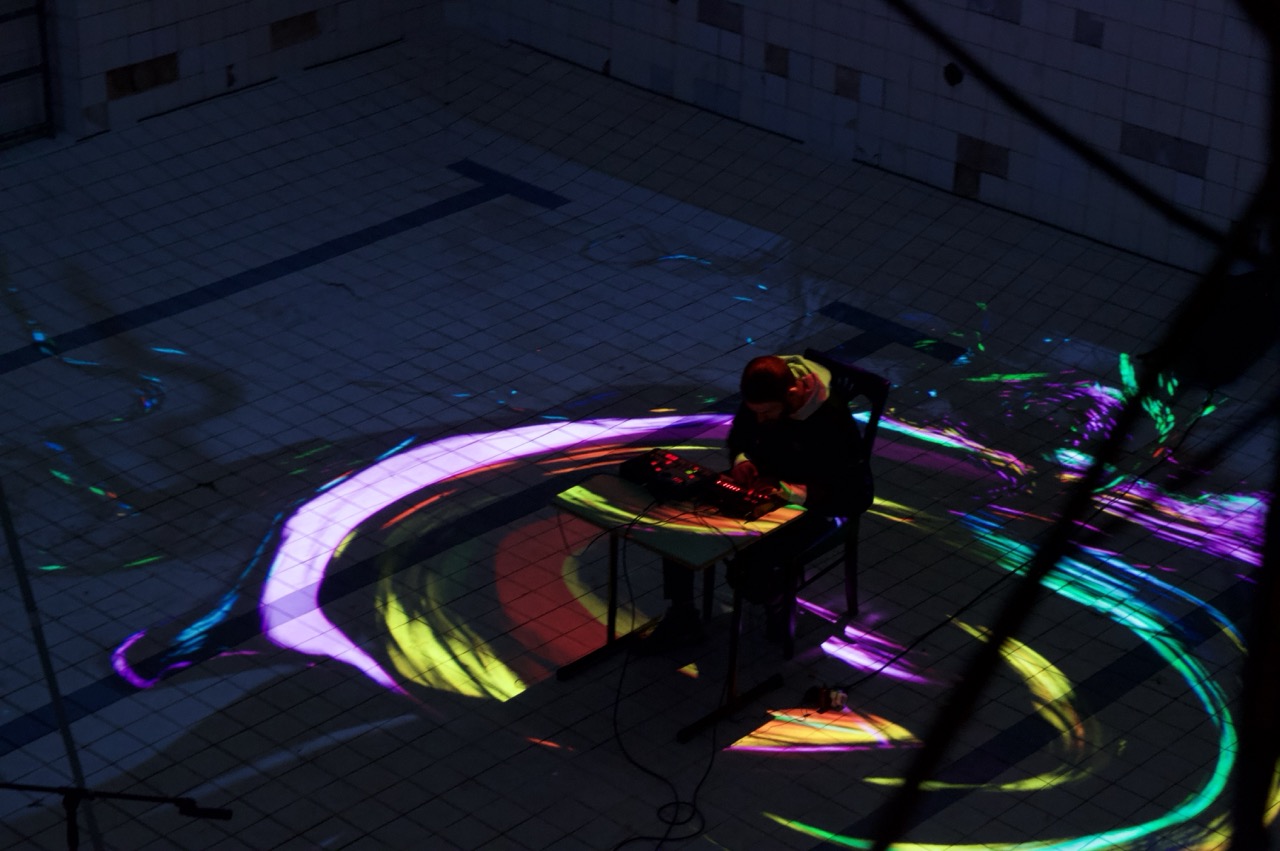

This project was technically challenging because multiple software and hardware components must operate together in real time. The machines must capture and share data across all connected devices, perform real-time analysis, send data to audio engines, and visualize complex data using GPU particles.

We capture raw EEG data and analyze it in Python, a robust and versatile tool for connectivity and other scientific analysis. In this setup, we use z-score analysis to examine the shape features of each frequency band. By comparing band shapes and phase, we can identify similarities and understand how brain regions interact within a single user. We apply this technique to each frequency band across all electrodes, and we also analyze inter-brain synchronization to determine where shared patterns occur between the two performers. Using Lab Streaming Layer (LSL), we share both raw and processed data across our local Ethernet network, so every machine in the setup works from the same data streams.
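The paragraph above names two analysis steps. One minimal way to express them with NumPy is sketched here, under stated assumptions: per-electrode band power z-scored across electrodes (one reading of the z-score step), and, for inter-brain synchronization, the phase-locking value (PLV), a widely used IBS measure that the text does not confirm as the project's metric. The band edges, the FFT-masking band-pass and all function names are hypothetical.

```python
import numpy as np

# Hypothetical band edges in Hz; the project's actual bands are not documented here.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_power(eeg, fs, band):
    """Mean FFT power per channel inside `band`; eeg has shape (channels, samples)."""
    spectrum = np.abs(np.fft.rfft(eeg, axis=1)) ** 2
    freqs = np.fft.rfftfreq(eeg.shape[1], d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs < band[1])
    return spectrum[:, in_band].mean(axis=1)


def zscore_band_features(eeg, fs):
    """For each band, z-score the power across electrodes (mean 0, std 1)."""
    features = {}
    for name, band in BANDS.items():
        power = band_power(eeg, fs, band)
        features[name] = (power - power.mean()) / (power.std() + 1e-12)
    return features


def bandpass_fft(x, fs, band):
    """Crude zero-phase band-pass: zero every FFT bin outside `band`."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[(freqs < band[0]) | (freqs >= band[1])] = 0.0
    return np.fft.irfft(spectrum, n=x.size)


def instantaneous_phase(x):
    """Phase of the analytic signal, via an FFT-based Hilbert transform."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.angle(np.fft.ifft(np.fft.fft(x) * h))


def inter_brain_plv(x_a, x_b, fs, band):
    """Phase-locking value (0..1) between one channel of performer A and one of B."""
    phase_a = instantaneous_phase(bandpass_fft(x_a, fs, band))
    phase_b = instantaneous_phase(bandpass_fft(x_b, fs, band))
    return float(np.abs(np.mean(np.exp(1j * (phase_a - phase_b)))))


if __name__ == "__main__":
    fs = 256
    t = np.arange(0, 4, 1 / fs)
    a = np.sin(2 * np.pi * 10 * t)
    b = np.sin(2 * np.pi * 10 * t + 0.8)  # same 10 Hz rhythm, constant phase lag
    c = np.sin(2 * np.pi * 11 * t)        # 11 Hz: the phase difference keeps drifting
    print(round(inter_brain_plv(a, b, fs, BANDS["alpha"]), 3))
    print(round(inter_brain_plv(a, c, fs, BANDS["alpha"]), 3))
```

The phase-locked pair should score close to 1 and the drifting pair close to 0. The FFT mask keeps the example dependency-free; a real pipeline would more likely use a proper filter design and windowed, sliding-window estimates.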

Unreal Engine 5 (UE5) plays a central role in our project: all visuals are created in it. With the LSL plugin for UE5, we receive LSL data streams, apply logic to them, and visualize them in various ways. Unreal Engine has a built-in GPU-based particle system that can render hundreds of thousands of particles in real time. This is exactly what we need to visualize complex data, and EEG data quickly becomes extremely complex. Did you know that there is a Python plugin for Unreal Engine that works at runtime? Check out the Yothon plugin for UE5. It currently handles basic tasks, but multi-threading and more are promised for future versions.

Let's take a look at the back screen, which displays the inter-brain synchronization. We overlay graphics on top of the camera feed, which can be done in various ways. We tried the ZED stereo camera, which provides a color image, a depth image, and tracked, segmented bodies with skeletons.

Working with the ZED stereo camera is not easy. Camera initialization can be complex, and body tracking can take some time. If you need to track a specific number of users, this has to be implemented manually. It is currently not possible to segment only human bodies: segmentation is based on the depth image, with centimeter precision. Precision is welcome, but the physical space must be large enough that the composited graphics do not overlap with walls and other objects. Ideally, we would segment only the human bodies and push the rest of the camera feed further back, so that all the graphics could sit in between.
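Depth-based segmentation also explains the space constraint: anything closer to the camera than the virtual graphics plane ends up in front of the graphics, walls included. A minimal NumPy sketch of depth keying, assuming a depth map in metres that is already registered to the color image; this is not the ZED SDK API, and the function name and plane distance are hypothetical.

```python
import numpy as np


def composite_with_depth(camera_rgb, depth_m, graphic_rgb, graphic_alpha, plane_m):
    """Place a graphics layer at a virtual distance `plane_m` (metres) inside the camera view.

    Pixels whose measured depth is nearer than the plane (for example a performer)
    stay in front of the graphic; everything farther away is covered by it.
    """
    # Invalid depth readings (NaN/inf) are treated as far away, i.e. behind the graphic.
    depth = np.where(np.isfinite(depth_m), depth_m, np.inf)
    in_front = depth < plane_m
    alpha = np.where(in_front, 0.0, graphic_alpha)[..., None]  # (H, W, 1) for broadcasting
    return alpha * graphic_rgb + (1.0 - alpha) * camera_rgb


# One row of three pixels: a performer at 1.5 m, a wall at 2.5 m, and a missing reading.
camera = np.zeros((1, 3, 3))
graphic = np.ones((1, 3, 3))
depth = np.array([[1.5, 2.5, np.nan]])
print(composite_with_depth(camera, depth, graphic, 1.0, plane_m=2.0)[0])
```

Moving the wall closer than `plane_m` makes it cut through the graphics, which is why the room has to be deep enough for this approach.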

The particles that you can see in the swimming pool were created with the UE5 Niagara particle system, using custom vector fields that we designed in Houdini. A vector field acts as a path for the particles: they are guided through this 3D grid of arrows, which whirlpools into the center. The result is artistic and visually pleasing, and it also helps us understand inter-brain synchronization. Each color represents a specific frequency band, so we can see how long synchronization lasts over time.
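The idea of a vector field acting as a path can be sketched outside the engine: each step, a particle looks up the vector at its position and moves along it. In this minimal sketch, an analytic whirlpool field stands in for the Houdini-authored grid that Niagara samples, and particles are advected with explicit Euler steps. The swirl and pull strengths, time step and function names are hypothetical.

```python
import numpy as np


def whirlpool_field(pos, swirl=2.0, pull=0.5, eps=1e-6):
    """Velocity for each particle position (N x 3): swirl about the z axis plus an inward pull."""
    x, y, z = pos[:, 0], pos[:, 1], pos[:, 2]
    r = np.maximum(np.hypot(x, y), eps)               # guard against division by zero on the axis
    tangent = np.stack([-y / r, x / r, np.zeros_like(z)], axis=1)
    inward = np.stack([-x / r, -y / r, -z], axis=1)   # toward the axis and toward the z = 0 plane
    return swirl * tangent + pull * inward


def advect(pos, steps, dt=0.05):
    """Move particles along the field using explicit Euler integration."""
    for _ in range(steps):
        pos = pos + dt * whirlpool_field(pos)
    return pos


# A ring of 8 particles at radius 1, slightly above the pool surface.
angles = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
start = np.stack([np.cos(angles), np.sin(angles), np.full(8, 0.5)], axis=1)
end = advect(start, steps=50)
print(np.hypot(end[:, 0], end[:, 1]).round(3))
```

The particles spiral inward until the slight outward drift of the explicit Euler step balances the pull, so they settle into a small orbit around the center rather than collapsing onto it.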

The visualizations on the screen behind us and on the floor run on separate computers. We use LSL to keep the data seamlessly synchronized across these machines.
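LSL timestamps every sample, and a receiver can map remote timestamps onto its local clock (in pylsl, by adding the offset returned by `StreamInlet.time_correction()`). Once two streams share a clock, pairing their samples is a nearest-timestamp lookup. A minimal NumPy sketch, assuming sorted, already-corrected timestamps in seconds and at least two samples in stream B; the function name and the 10 ms tolerance are hypothetical.

```python
import numpy as np


def align_nearest(ts_a, ts_b, max_gap=0.01):
    """For each timestamp in stream A, return the index of the nearest sample in stream B.

    Returns -1 where the nearest B sample is more than `max_gap` seconds away.
    """
    ts_a = np.asarray(ts_a, dtype=float)
    ts_b = np.asarray(ts_b, dtype=float)
    # Candidate neighbours: the first B sample at or after each A sample, and the one before it.
    right = np.clip(np.searchsorted(ts_b, ts_a), 1, len(ts_b) - 1)
    left = right - 1
    pick_left = np.abs(ts_a - ts_b[left]) <= np.abs(ts_b[right] - ts_a)
    idx = np.where(pick_left, left, right)
    idx[np.abs(ts_b[idx] - ts_a) > max_gap] = -1
    return idx


eeg_a_ts = np.arange(0.0, 1.0, 1 / 256)     # performer A, 256 Hz
eeg_b_ts = np.arange(0.0005, 1.0, 1 / 250)  # performer B, different rate and offset
pairs = align_nearest(eeg_a_ts, eeg_b_ts)
print(int((pairs >= 0).sum()), "of", len(pairs), "samples paired")
```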

Unreal Engine now supports Open Sound Control (OSC), MIDI, and the Art-Net DMX protocol, which makes it well suited to running all the gear on stage. We use OSC and MIDI to share analyzed data with other machines and to control sound parameters in Logic Pro and Ableton Live. DMX, in turn, is the industry standard for driving the colors, positions, animations, and other effects of lighting fixtures. There is still room for improvement in how we use DMX, and we are confident we can get much more out of it.
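An OSC message is a null-padded address string, a type-tag string and big-endian arguments, as defined in the OSC 1.0 specification. A dependency-free sketch of encoding float arguments and sending one message over UDP; the address `/ibs/alpha`, host and port are hypothetical, and the receiving side (DAW, bridge or patch) is not shown.

```python
import socket
import struct


def _osc_string(s):
    """OSC-string: ASCII bytes, null-terminated, padded with nulls to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Encode an OSC 1.0 message whose arguments are all float32 values."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", float(v)) for v in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(address, *floats, host="127.0.0.1", port=9000):
    """Send one OSC message over UDP (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *floats), (host, port))


print(osc_message("/ibs/alpha", 0.62).hex())
# send_osc("/ibs/alpha", 0.62)  # would send to a listener on the placeholder port
```

UDP keeps latency low and never blocks the sender, at the cost of occasional dropped packets, which is usually acceptable for continuously updated control values.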

"Together with 27 fellow performance artists, we have created a unique performance that is also an experiment in exploring the depths of inter-brain synchronization. By pushing our boundaries and discovering what triggers synchronization, we hope to inspire a new wave of creativity and innovation. We believe that through collaboration and experimentation, we can unlock the full potential of our minds and achieve greatness beyond our wildest dreams."

Thank you to all participants for contributing to our success. Your participation has been invaluable, and we appreciate your time, effort, and artistic input.

Anna Segliņa, Elza Jančuka, Rūta Krūskopa, Alise Elizabete Ipatova, Evelīna Zariņa, Adele Atlāce, Toms Baldonis, Elza Jančuka, Alise Jaunkalne, Amēlija Luīze Lepse, Estere Lutce, Enija Pastva, Adrija Plūme, Elza Riekstiņa, Patrīcija Sokolovska, Dr. Agnese Dāvidsone, Lāsma Brūvere, Līva Rēzija Vanaga, Kerija Mugina, Denīze Ozola-Kumeliņa, Frīda, Bear Cub - Braiens Brauķis, Mama Bear - Lāsma Bērziņa, Papa Bear - Ernests Ozoliņš, Little Mouse - Annija Kate Balode, Woodpecker - Elīza Zvirbule, Deer - Annija Kate Balode, Hare - Kate Zvārgule, Little Fox - Estere Bāliņa, Host - Anna Paula Gruzdiņa, Sigita Skrabe, Melānija Fimbauere, Lizete Bērziņa, Loreta Šperliņa-Priedīte, Ieva Freimane-Mihailova, Anna, Liene Brokāne, Samirs Laurs and Tomass Alksnītis, Andris Taurītis, Braiens Brauķis, Lāsma Bērziņa, Ernests Ozoliņš, Skarleta Kalniņa, Elīza Zvirbule, Annija Kate Balode, Estere Bāliņa, Anna Paula Gruzdiņa, Amēlija Eglīte, Lote Mincāne, Kate Jursone, Kristiāna Putniņa, Toms Treimanis, Rūdolfs Segliņš, Jurģis Segliņš, Adrians Gerasimovs, Niks Judenkovs, Elīza Petruseviča, Artūrs Eglītis, Elija Stolere, vocal ensemble "IMERA"

About the artists

Jachin Pousson

Jachin is an active composer and musician who performs in numerous ensembles in Latvia and abroad. Over the last 20 years, he has published original works spanning academic, free jazz, electroacoustic, post-rock, electronic, folk, and metal genres. He is also a guest lecturer in Music Psychology and Music Physiology and a member of the scientific research team (ZPC) at JVLMA. His research focuses on Brain-Computer Music Interface (BCMI) system design for Embodied Music Interaction applications.

Mārtiņš Dāboliņš

I am following my passion: audio-visual art, with a particular emphasis on interactive video scenography. Over the past two decades, I have had the pleasure of providing these services for a diverse range of events, including corporate events, concerts, and performances. To stay current and innovative, I am constantly researching and studying. I enjoy experimenting with technology and art to explore new directions and find unique, creative solutions.